Recent searches

No recent searches

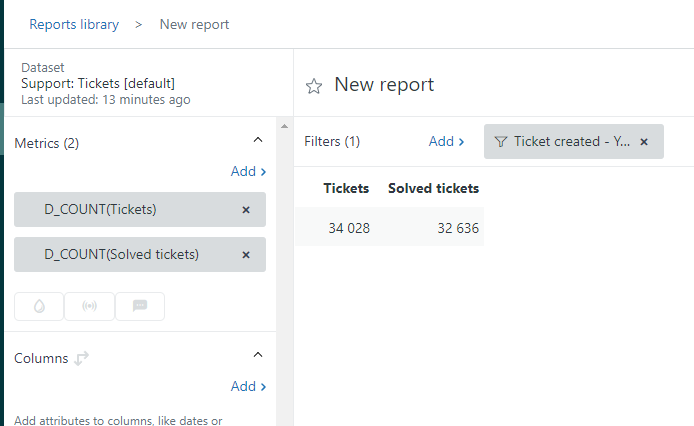

Tickets solved metric discrepancy in Updates history Explore dataset

Posted Jul 29, 2022

I am a little confused about what I'm looking at in explore.

I'm trying to validate the total number of solved tickets per group in my organization.

The article I was pointed to suggested that I use the Support Update History Data Set to see how many tickets were created vs. solved.

However, since I can never really be confident with the data in explore, I went validated this against the Support Ticket Data Set.

As expected, the data doesn't match.

Would someone explain to me why when I am looking at the same information, total tickets created and total tickets solves I have 4 completely different numbers?

2

16

16 comments

Official

Eugene Orman

Nathan Cassella, thank you for sharing this issue with the community. We will add some notes about this behaviour in the recipe you mentioned.

CJ Johnson ZZ Graeme Carmichael, thank you for jumping in to help and investigate this issue.

There are two reasons for the discrepancy between these metrics in the Tickets vs Updates history datasets.

1st reason

The Tickets dataset does not include any deleted tickets but the Updates history does. If you want to remove the deleted tickets from your report you need to use some ticket level attributes in your report, like Ticket status or Ticket group. The deleted tickets will be removed because of the join with the tickets table. If you want to understand the structure of the datasets better refer to this article - Metrics and attributes for Zendesk Support.

2nd reason

In order to capture the unique creation and resolution events we use the field changes data.

We will review the formulas used for the default Updates history metrics at the beginning of 2023 and will update them if there is a better configuration.

Conclusion

0

ZZ Graeme Carmichael

Nathan

I can recreate this.

The metric for Tickets_Solved in the Support Updates History dataset is defined as:

IF ([Changes - Field name]="status"

AND [Changes - Previous value]!="solved"

AND ([Changes - New value]="solved" OR [Changes - New value]="closed")

AND ([Ticket status - Unsorted] = "Solved" OR [Ticket status - Unsorted] = "Closed")

AND [Update - Timestamp]=[Ticket solved - Timestamp])

THEN [Update ID]

ENDIF

For some ticket known to be solved, does not return the ticket ID. It is something to do with the last line on the conditions:

AND [Update - Timestamp]=[Ticket solved - Timestamp]

For some reason this is causing the count to fail.

It may be best for you to reach out to Zendesk support directly.

You can create your own metric as a work around. Replace the last condition with a different way of checking the dates matching and return Ticket ID rather than the Update ID.

IF ([Changes - Field name]="status"

AND [Changes - Previous value]!="solved"

AND ([Changes - New value]="solved" OR [Changes - New value]="closed")

AND ([Ticket status - Unsorted] = "Solved" OR [Ticket status - Unsorted] = "Closed")

AND DATE_DIFF ([Update - Timestamp],[Solved Timestamp],"nb_of_seconds")=0

)

THEN [Ticket ID]

ENDIF

0

CJ Johnson

I can recreate this, too. This is definitely a problem that effects everyone.

Edit: I did some digging and it seems to struggle and not count tickets that were solved more than once, even if the second solve was in the timeframe specified.

1

Nathan Cassella

Eugene Orman

I appreciate you clearing this up.

I have a few suggestions going forward:

1. Use the same logic across all datasets when defining Tickets Created and Tickets Solved: Those numbers are too important to have even a 1% variance.

2. Create a metric in all datasets for deleted tickets: This is a useful metric on its own, but building a custom metric to extract this from the counts is a lot of unnecessary work.

1

CJ Johnson

This answer is not accurate. Please, look into this issue further. I investigated as well, and it was very clear the issue was not deleted tickets for me, but that the Updates dataset incorrectly does not count tickets solved during the time period selected, if they were previously solved. I was also able to write a custom metric that functions correctly and returns the right number on this dataset. The suggested metric would not fix this issue.

I don't understand why Zendesk would leave a bad metric that return misleading, incorrect data in place knowingly. I think it's completely unacceptable for any dataset to be less than 100% accurate. These are metrics that literally impact the hiring and firing of agents. There should be a strong a sense duty here to ensure accuracy.

Edit: If you need further proof, you can recreate this issue with the Ticket Solved filter on the Updates History dataset, "Tickets Created" is not a necessary component to invoke this problem.

1

CJ Johnson

As a follow-up, the suggested workaround is double counting solves if the ticket was solved multiple times. I was suspicious when I saw it was returning *more* solves than the default dataset was.

That's not what the metric "Tickets Solved" should return, as this report is asking for the number of tickets solved, not the number of solves that occurred. You shouldn't use "update_id" as the value returned for this reason.

1

Nathan Cassella

CJ Johnson

I agree with you; my main concern is that any dataset that reflects Tickets Created or Solved needs to use the same calculation, which is not the case. So if I am being told to use the Default Ticket Dataset because that's accurate, then I will use that to get what I need for the presentation to my Executive Leadership Team next week.

Based on the reply, there are two ways to proceed:

1. Use the Ticket default set and the brackets to see updates. This is a workaround to at least see First Time Reply and First Time Resolution, but only IF the logic used to calculate those brackets can be trusted (which I'm not confident in). While we are using that, we hope that Zendesk with actually make the fixes to this, and it doesn't end up sitting the development hell (like the 14 years it took to get Round Robin ticketing into a beta)

2. (And the better option) Get a real reporting system like PowerBI or one of it's competitors and pull that data you want from Zendesk's backend. This is likely where I'll move my organization in the next few years.

0

ZZ Graeme Carmichael

Thanks Eugene

Hopefully, this default metric can be updated.

0

Eugene Orman

Thank you for following up on this issue.

My apologies I forgot to update the THEN clause of the formula. It needs to count the Ticket IDs, not the Update IDs to avoid double-counting. I have updated the calculated metric formula that I suggested above. The formula should end with "THEN [Update ticket ID] ENDIF" not with "THEN [Update ID] ENDIF".

Also, I think I found a way to make the formula more accurate. Please try this formula and let me know if it will return more accurate numbers in your accounts:

-1

Judy Correia

Agreed with some of the above comments re: even 1% variance in data accuracy is concerning, inconsistency in results when metric description (i.e. created, solved) is the same. In addition, I have noticed that data results return from a report differs when report is opened on different days. While some variability may be expected on solved (a ticket can be set to solved and re-opened) but for a metric like created, a ticket has a single created date. When opening a report with this metric with the exact same filters the # of tickets created should be the same. But again, maybe this is due to confusing nature in which the same descriptor can mean different things depending on the data set you are looking at. Correct or incorrect, we actually export our data weekly an report in another system. This ensure we are reporting consistent historical data.

0

Jeremy Pinar

Eugene Orman any news on that early-'23 investigation? We're having similar issues.

1

Julia DiGregorio

Eugene Orman I wish more people knew about this issue. I have been working with support for over 6 weeks on the very same problem and spent countless hours providing them with examples and trying to change the formula behind the metric. Although the formula above helped, it didn't completely solve the problem. We have almost 18,000 solved tickets and 224 had 0 value for the solved metric. With your formula we are now down to 20. However, even 20 is too much when you are working with large clients, not to mention the amount of work needed to fix everyone one of our update history reports, rewrite formulas, etc. There should be a huge disclaimer on the report type - that or the underlying issue should be fixed.

1

Julia DiGregorio

For those looking for an Created, Solved, Pending and Rolling Backlog please see the following post:

https://support.zendesk.com/hc/en-us/community/posts/6539596439322-Report-for-Open-Closed-Not-Solved-and-Backlog

0

Shawna James

0

D.Fitz

+1 for getting this resolved.

I was directed to the above formula by one of your support team and while the above is helpful, I don't understand why you wouldn't just update/fix the default metric.

We've only just uncovered this problem and have too many reports in Explore to update, so we'll now just have to progress with inaccurate data.

0

Julia DiGregorio

CJ Johnson Hello CJ - would you be willing to share the metric you created. I have invested over 100 hours in this issue, and am a bit frustrated. Any help you could provide would be greatly appreciated

1